Flux AI vs MidJourney: The Battle for Artistic Freedom

In the evolving landscape of AI-powered art generation, two platforms, Flux AI and MidJourney, have emerged as key players with distinct philosophies and functionality. Flux AI, developed by ex-Stability AI members, champions a censorship-free environment that lets artists explore their creativity without restrictions. It offers three models (Schnell, Dev, and Pro) that cover needs ranging from quick image generation to high-quality output. Openness varies by model: Schnell is released under the open-source Apache 2.0 license, Dev has openly available weights under a non-commercial license, and Pro is available only through an API. In contrast, MidJourney is known for its polished visuals but imposes strict content guidelines that limit the types of images that can be created, particularly those that are violent, explicit, or politically charged.

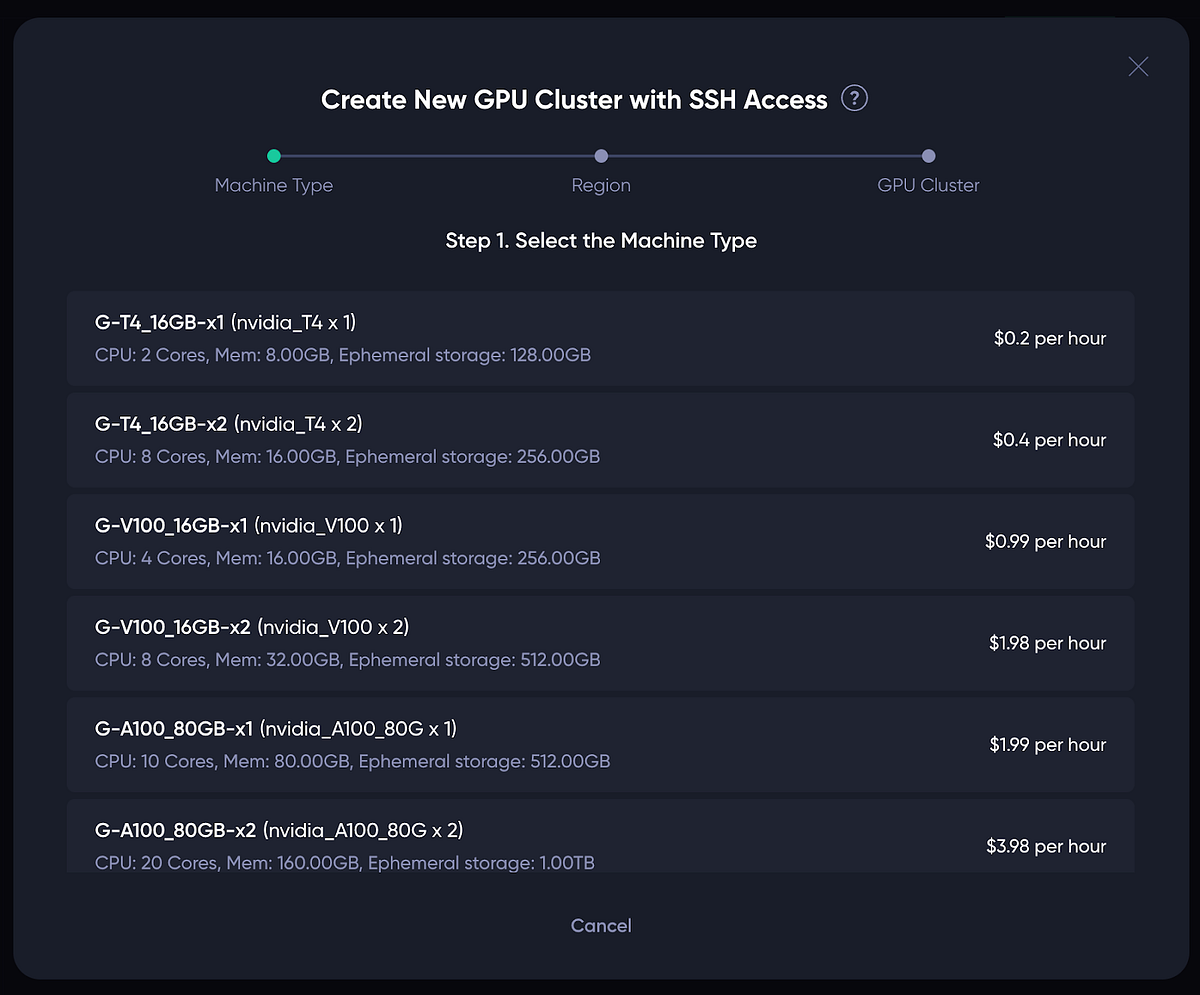

MidJourney’s recent v6.1 upgrade has improved image quality and coherence, addressing earlier issues such as the notorious “weird hand” problem. Despite these improvements, the platform’s stringent content restrictions remain a significant drawback that can stifle artistic expression. Flux AI’s no-censorship approach, by contrast, lets creators tackle complex themes and push artistic boundaries, making it a compelling choice for those seeking creative freedom. Pricing further highlights the difference: Flux AI’s openly released models are free for users who run them on their own hardware, while MidJourney operates on a subscription basis, starting at $10 per month.

Ultimately, the choice between Flux AI and MidJourney boils down to individual priorities. For artists who prioritize convenience and high-quality visuals, MidJourney may be the preferred option. However, for those who value unrestricted creative expression and the ability to explore any subject matter, Flux AI stands out as the clear winner. As the debate over censorship and artistic freedom continues, these platforms represent a broader cultural movement advocating for the right to create without limitations.
