Theta EdgeCloud Launches GPU Clusters for Enhanced AI Model Training

Theta EdgeCloud now lets users launch GPU clusters, a key building block for training large AI models. A cluster consists of multiple GPU nodes of the same type within a single region, so the nodes can communicate directly with minimal latency. This matters for distributed AI model training, where the work is split across devices and processed in parallel. As a result, training jobs that would take days or weeks on a single GPU can, depending on the workload, finish in hours or even minutes, shortening the development cycle for AI applications.
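To make "processing in parallel across devices" concrete, here is a minimal, pure-Python simulation of one data-parallel training step. Data parallelism is one common way to distribute training, but the article does not say which strategy EdgeCloud users employ. Each simulated worker computes the gradient on its own shard of the batch, and an all-reduce averages the gradients so every replica applies the same update. The toy linear model, the function names (`grad_mse`, `shard`, `all_reduce_mean`, `data_parallel_step`), and the data are illustrative assumptions. On a real cluster, a framework such as PyTorch's `DistributedDataParallel` would do this on GPUs and exchange gradients over the network between nodes.

```python
# Simulated data-parallel step on a toy linear model y ≈ w*x + b (no real GPUs).

def grad_mse(w, b, xs, ys):
    """Mean gradient of the squared error over one shard: (dL/dw, dL/db)."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


def shard(seq, num_workers):
    """Split a batch into equal contiguous shards, one per worker."""
    if len(seq) % num_workers:
        raise ValueError("batch size must be divisible by num_workers")
    size = len(seq) // num_workers
    return [seq[i * size:(i + 1) * size] for i in range(num_workers)]


def all_reduce_mean(grads):
    """Average the per-worker gradient tuples, as an all-reduce would."""
    k = len(grads)
    return tuple(sum(g[i] for g in grads) / k for i in range(len(grads[0])))


def data_parallel_step(w, b, xs, ys, num_workers, lr=0.01):
    """One training step: local gradients, all-reduce, identical update everywhere."""
    # On real hardware each worker would compute its shard's gradient concurrently.
    local = [grad_mse(w, b, sx, sy)
             for sx, sy in zip(shard(xs, num_workers), shard(ys, num_workers))]
    gw, gb = all_reduce_mean(local)
    # Every replica applies the same averaged gradient, so the copies stay in sync.
    return w - lr * gw, b - lr * gb


if __name__ == "__main__":
    xs = [float(i) for i in range(8)]
    ys = [3.0 * x + 1.0 for x in xs]
    w, b = 0.0, 0.0
    for _ in range(2000):
        w, b = data_parallel_step(w, b, xs, ys, num_workers=4)
    print(round(w, 3), round(b, 3))
```

Because the shards are equal in size, the average of the per-shard mean gradients equals the full-batch gradient, so four workers produce exactly the update one worker would compute on the whole batch. What changes on real hardware is that the per-shard work runs at the same time on different GPUs.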

Beyond faster training, GPU clusters support horizontal scaling: users can add more GPUs as their needs grow. This is especially useful for training large foundation models or architectures with billions of parameters, whose memory requirements exceed the capacity of a single GPU. Many EdgeCloud customers, including leading AI research institutions, had requested the feature, underscoring its importance in Theta EdgeCloud's evolution as a decentralized cloud platform for AI, media, and entertainment.
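A back-of-the-envelope calculation shows why multi-billion-parameter models outgrow a single GPU. This sketch assumes mixed-precision training with the Adam optimizer and uses the common accounting of about 16 bytes of model and optimizer state per parameter (as in the ZeRO paper). It ignores activations and framework overhead, and it uses an 80 GB GPU purely as an example; none of these figures come from the article. Spreading that state across several GPUs also requires a sharding technique such as ZeRO or PyTorch FSDP.

```python
import math

# Bytes per parameter for mixed-precision training with Adam (assumed accounting):
# fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + fp32 Adam first moment (4) + fp32 Adam second moment (4) = 16 bytes.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def training_state_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Model + optimizer state in decimal gigabytes (activations excluded)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params: float, gpu_memory_gb: float) -> int:
    """Lower bound on GPU count if the state is sharded evenly across GPUs."""
    return math.ceil(training_state_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    for params in (1e9, 7e9, 70e9):
        print(f"{params / 1e9:.0f}B params: {training_state_gb(params):.0f} GB of state, "
              f"at least {min_gpus(params, 80)} x 80 GB GPUs")
```

Under these assumptions, a 7B-parameter model needs about 112 GB of training state, more than one 80 GB GPU holds, so at least two GPUs are required before activations are even counted. A 70B-parameter model needs at least 14.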

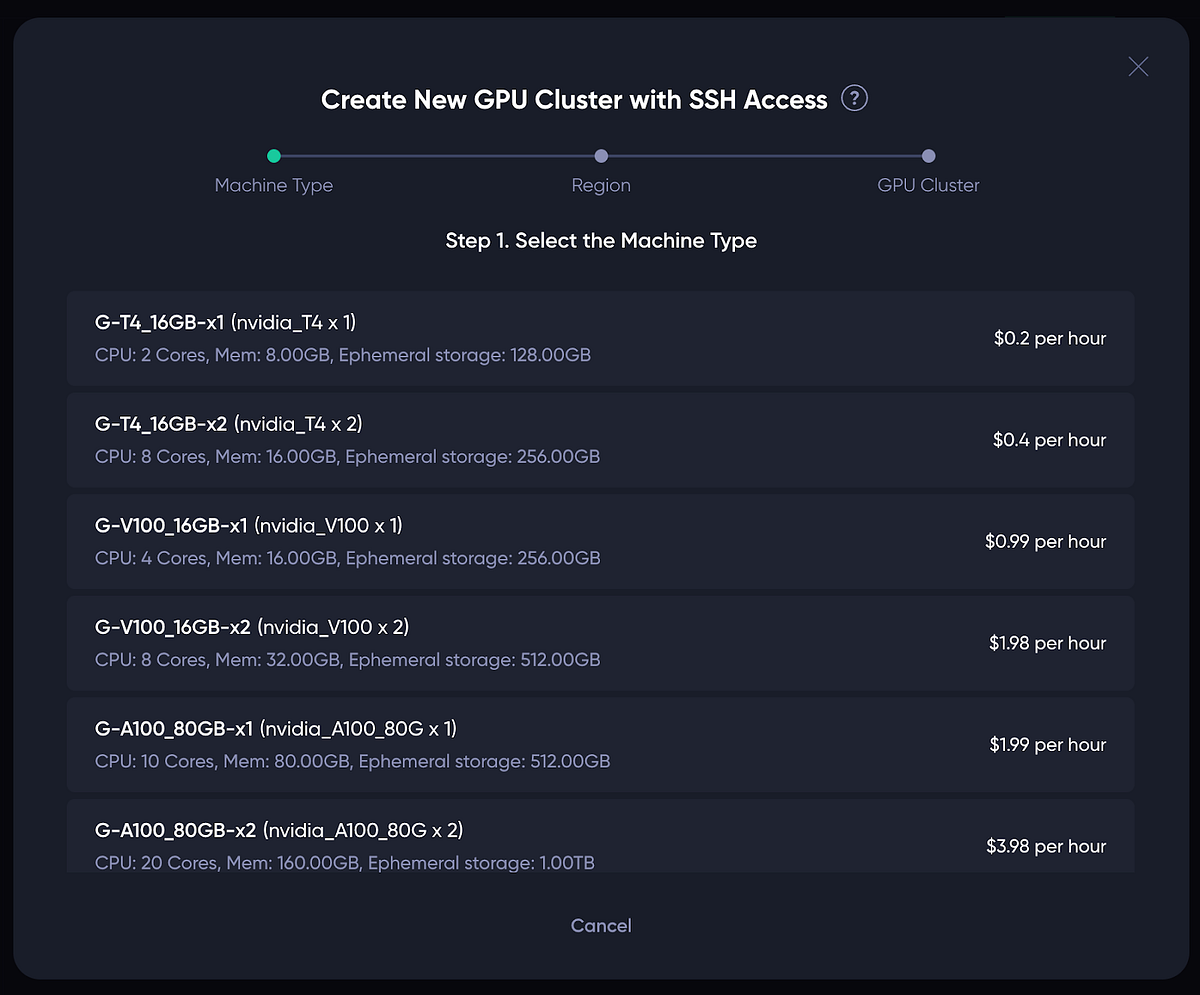

Getting started with GPU clusters on Theta EdgeCloud takes three steps: select the machine type, choose the region, and configure the cluster settings, such as the cluster size and container image. Once the cluster is created, users can SSH into the GPU nodes and run distributed jobs across them. The cluster can also be scaled in real time, so capacity can keep pace with changing workloads. Overall, the feature makes Theta EdgeCloud a more competitive option in the decentralized cloud space, particularly for AI-driven applications.
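Once the nodes are reachable over SSH, a distributed job is typically started on every node with a launcher such as PyTorch's `torchrun`. The sketch below is hypothetical: `ClusterSpec`, `resize`, and `torchrun_commands` are illustrative names that mirror the three setup steps, not part of any Theta EdgeCloud API or SDK. The machine type, region, image name, node addresses, port 29500, and 8 GPUs per node are placeholder assumptions, and the article does not say which launcher EdgeCloud users run. The code only builds command strings using standard `torchrun` flags (`--nnodes`, `--nproc_per_node`, `--node_rank`, `--master_addr`, `--master_port`).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ClusterSpec:
    """Hypothetical mirror of the three setup steps; not an EdgeCloud API."""
    machine_type: str       # step 1: GPU node type (identical for every node)
    region: str             # step 2: all nodes live in one region
    size: int               # step 3: number of nodes
    container_image: str    # step 3: image each node runs
    gpus_per_node: int = 8  # assumption: depends on the chosen machine type

    def __post_init__(self):
        if self.size < 1:
            raise ValueError("a cluster needs at least one node")


def resize(spec: ClusterSpec, new_size: int) -> ClusterSpec:
    """Return a copy with a new node count (a stand-in for horizontal scaling)."""
    return replace(spec, size=new_size)


def torchrun_commands(spec: ClusterSpec, node_addrs: list[str], script: str,
                      master_port: int = 29500) -> list[str]:
    """Build one torchrun command per node; node 0 hosts the rendezvous."""
    if len(node_addrs) != spec.size:
        raise ValueError("need exactly one address per node")
    master = node_addrs[0]
    return [
        f"torchrun --nnodes={spec.size} --nproc_per_node={spec.gpus_per_node} "
        f"--node_rank={rank} --master_addr={master} --master_port={master_port} {script}"
        for rank in range(spec.size)
    ]


if __name__ == "__main__":
    spec = ClusterSpec("example-gpu-type", "example-region", size=2,
                       container_image="example/image:latest")
    for cmd in torchrun_commands(spec, ["10.0.0.1", "10.0.0.2"], "train.py"):
        print(cmd)  # run each command on its node over SSH
```

Keeping every node the same machine type keeps `--nproc_per_node` uniform, which is why the spec stores a single `gpus_per_node`. After a resize, the commands are regenerated with the new node count.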
